Comfy with Python basic tooling, now what?

pdb, mypy, pyright, black, autopep8, flake8, ruff, pylint...

Summary

Classic situation: you have leveled up, now you can use better gear.

If you know pip, venv and startup scripts, what comes next?

You should definitely start by adding a better shell to your toolbox, such as ipython and jupyter. The next step is making friends with a debugger, preferably pdb first.

From there, formatters like black and ruff will give you fantastic value and make your code beautiful. Well, maybe not beautiful, but at least normalized.

If you want to up your game in code quality, or simply if you are sharing the source ownership in this weird congregation called "a team", linters will ensure a minimal code quality level. Very minimal. But real nonetheless. It's purely surface level, as there is no linter for being an idiot. Yet.

Then for code for which you wish to ensure a certain degree of robustness, a type checker will be the guardian of the temple of data congruency. It will help you detect when you try to solder a window on a submarine, or put an ice cream in the oven.

And if you reach the point where you have to write tests, a good test runner will make that easier on you.

Finally, since running all that stuff is a pain in the butt, a task runner will hide all that complexity behind nicely defined aliases.

Remember you don't need to use all those tools. They are here to help you reach your goals, they are not checkboxes to tick.

When you are ready

How do you know you need new tools? It's not like there is an XP bar next to your username at the login screen. Would be handy though.

Well, first, there are priorities. There is no point in adding tooling if you don't know the basics. You should be comfortable with pip, venv, and Python startup scripts. Having a good understanding of imports is also important and often neglected. That's the bare minimum, the essentials you have to master to be productive in Python.

Once you are there, it's a matter of what you want. If you want code that is easier to read from now on, investing in a formatter and a linter makes sense. If you feel like it's time to trade flexibility for robustness, a type checker is something to try.

You don't have to do any of those things. You can code for years without them. It's just that at some point, you will start to want them.

Generally, though, I would invite you to start your journey with a new shell, because it makes progressing and testing things out easier. And to master at least pdb, since a programmer ends up spending a lot of time trying to figure out what goes wrong.

Shell

If you run Python without any argument, it will start a Python shell in which you can interactively execute any Python code. While it contributed immensely to the popularity of the language, the default experience is pretty limited: no completion, black and white text, no block management...

But once you are getting more and more into code, exploring new things is a must, and a shell is great for this because the feedback loop is super short. Getting a better shell will make you want to use it more, and will allow you to practice with libs you don't know or dissect some data source you are unfamiliar with in a jiffy (I heard the inventor of the word said it’s pronounced giffy BTW).

For the terminal, there are several Python shell alternatives out there, like bpython or ptpython.

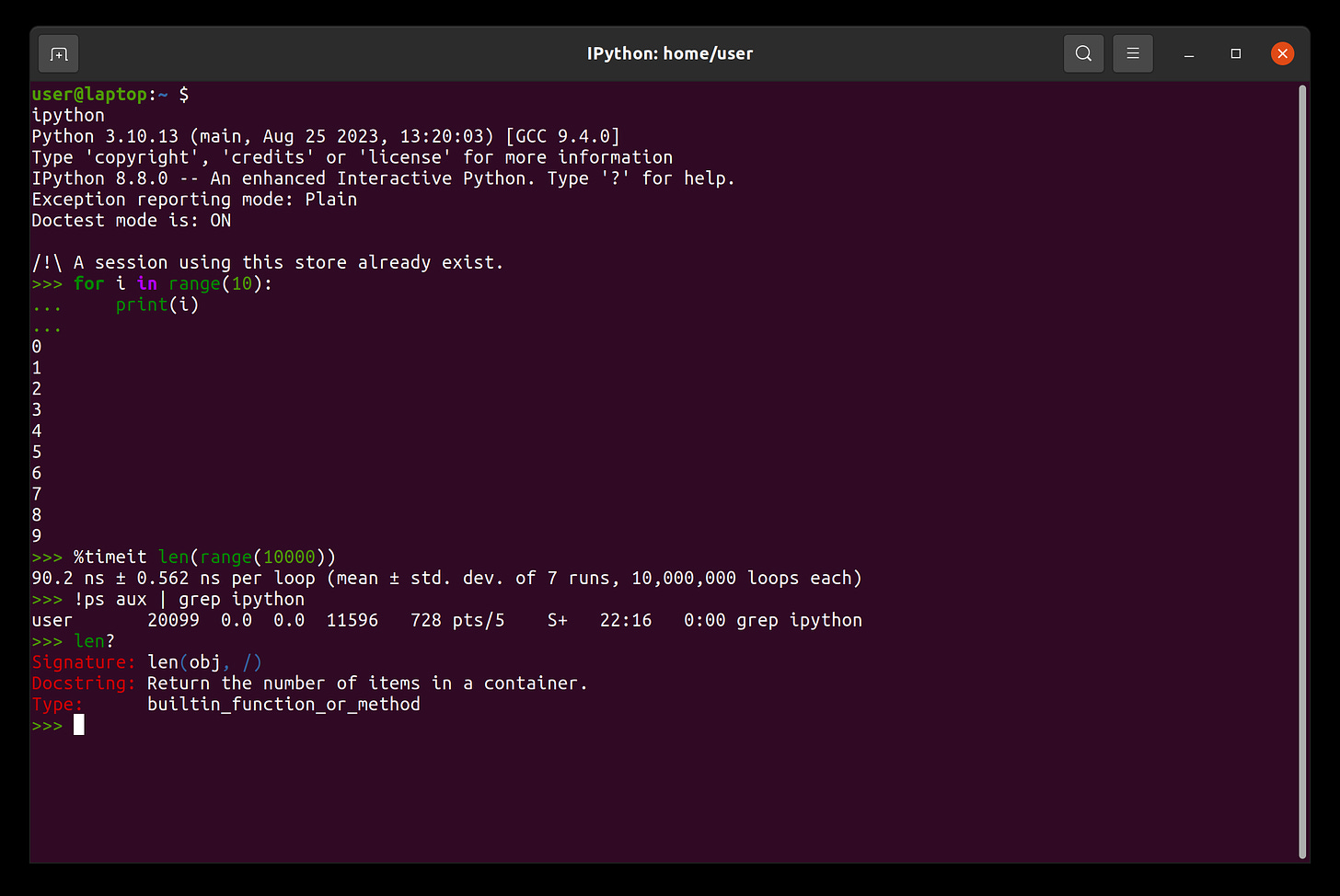

Nevertheless, I would recommend ipython: it's simple to use, yet powerful, packed with features, and the most battle-tested.

Using it, you will get:

Syntax highlighting.

Full block handling.

Plenty of handy shortcuts like "?" to get the help on an object.

Code completion.

Magic commands like "%timeit" to easily measure the time code takes to run.

And much more. Although you can ignore most of it at first, and just enjoy how clearer and less painful it is to use.
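To give an idea of what "%timeit" saves you, here is a rough sketch of the same measurement done by hand with the standard library timeit module. The build_squares function is a made-up example, and the numbers you get will depend on your machine. In ipython, the whole thing boils down to typing "%timeit build_squares()".

```python
import timeit


def build_squares(n=1_000):
    """Hypothetical function we want to measure."""
    return [i * i for i in range(n)]


# Call the function 1,000 times and get the total elapsed time in seconds.
runs = 1_000
elapsed = timeit.timeit(build_squares, number=runs)

# Convert the total into an average per call, in microseconds.
print(f"{elapsed / runs * 1e6:.1f} µs per call")
```

ipython picks the number of runs for you and formats the result, which is why the magic command is nicer for quick experiments.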

If you want to break free from the terminal, jupyter is your friend. It's a mix between a shell and an editor, and it will give you all the goodies of ipython, with a mouse and a document-oriented UI.

Debugger

Yes, I know, you use print() to debug. Me too. Everybody does. All. The. Time.

But some problems are hard to solve with just print(). The next step is logging, of course, but even that will fall short at some point.

You will need a debugger.

There are so many debuggers it's not even funny. Many editors, like PyCharm and VSCode, come with their own, but some graphical ones are standalone.

Yet, as I already wrote in this blog, I think Python devs should start to learn pdb first:

it's always with you because it ships with Python;

it works anywhere, even on a server without a GUI or through SSH;

it's there no matter your stack, OS or IDE;

it's fast;

and if you know how to use it, all other debuggers will be easy to use: what you learn on pdb maps to any other product.

So if you are not already pdb fluent, jump on our tutorial.

If you are, you may be interested in upgrading pdb a little. There are packages like pdb++ and pudb that will increase the comfort of your debugging-in-the-terminal experience by a lot.

Personally, ipdb has my vote, because it mixes ipython with pdb, two tools you should know anyway. So you capitalize on your skills.

That doesn't mean you shouldn't use the excellent debuggers provided by your editor, only that you should know how to do without them.
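If you have never seen pdb in action, here is a minimal sketch of the usual entry point: the built-in breakpoint() function (Python 3.7+), which opens a pdb prompt by default. The average function and its debug flag are made up for the illustration; the flag is only there so the snippet runs straight through unless you ask for the debugger.

```python
def average(values, debug=False):
    """Hypothetical function with an optional stop in the debugger."""
    total = 0
    for value in values:
        total += value
    if debug:
        # Pauses here and opens a (Pdb) prompt in the terminal.
        breakpoint()
    return total / len(values)


# debug stays off here, so nothing pauses.
# Call average([2, 4, 6], debug=True) to get the prompt.
print(average([2, 4, 6]))
```

Once at the prompt, "p total" prints a variable, "n" runs the next line, "c" resumes the program and "q" quits. That handful of commands already covers most debugging sessions.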

Formatters

Formatters are low-hanging productivity fruit, and pretty much any code, no matter the size or the complexity, benefits from them.

They save you the trouble of having to manually format as you type, and let you focus on the logic, not where to insert a space to make the file look pretty. On top of that, they unify the flow of instructions in the entire project, which means it will get easier to navigate and scan.

There are old school formatters with a lot of bells and whistles, like autopep8 and yapf, but I'm going to be that guy, and say you should just use black.

Black has more or less become the de facto standard for open source Python projects. Because of this, you will feel at home almost no matter the source you look at because it will match the style of yours.

Black has close to no settings, which means you will hate its formatting sometimes. However, for this price, you will remove endless debates about formatting, which often boil down to taste and preference, something that cannot be objectively measured.

By default it respects most of PEP8, which is the reference document on Python style, and will try to limit the size of git diffs. It's simply a very good tool, no matter how annoying some of the formatting it chooses may be.

Set it to format your doc on save, and forget it.

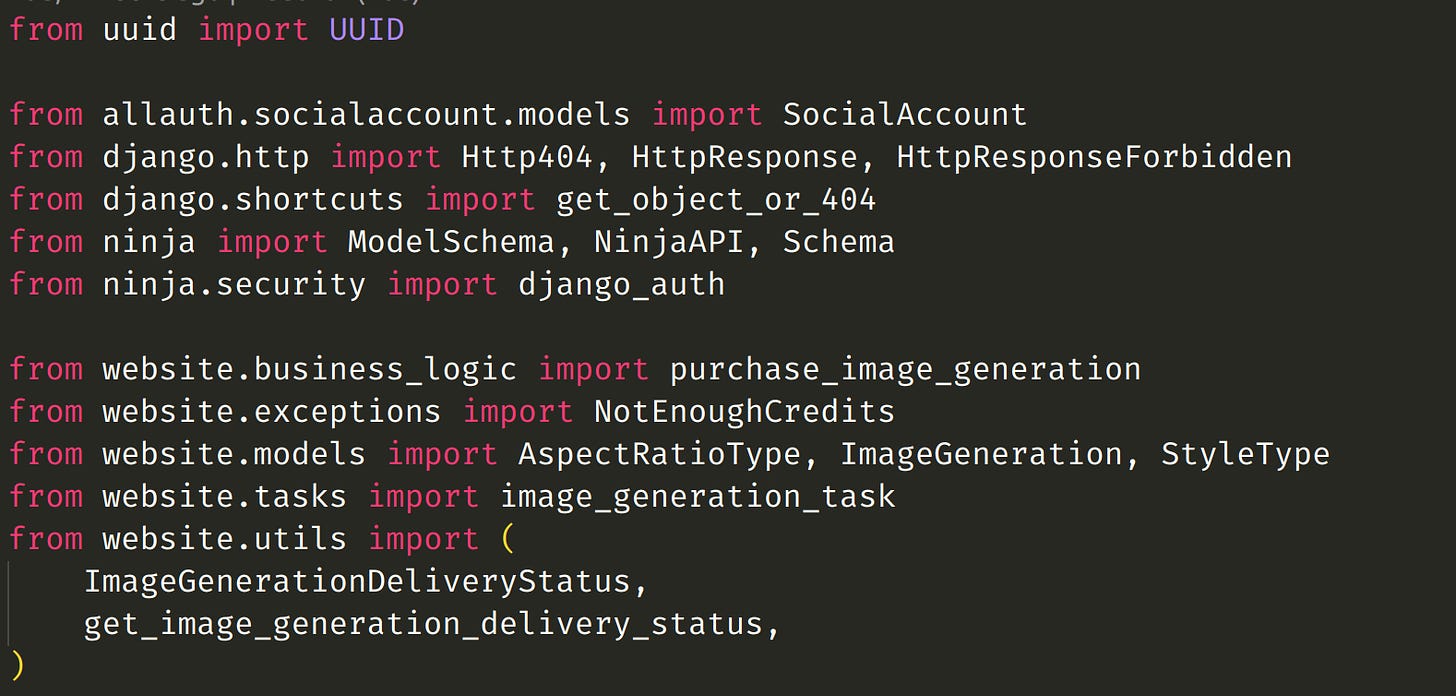

Before black:

After black:

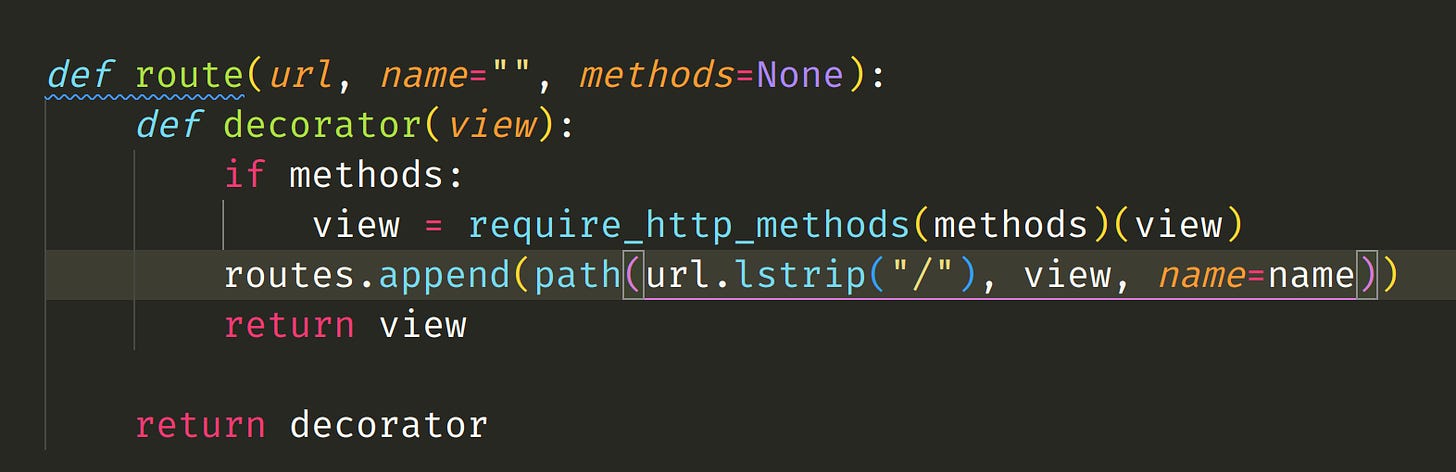
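For a text version of the same idea, here is a small sketch. The snippet in BEFORE is made up, and the code below it is what I would expect black to produce with its default settings: normalized spacing, 4-space indentation, double quotes, and two blank lines after a top-level function. The point is that only the layout changes, never the behavior.

```python
# Before: valid Python, but inconsistently spaced, indented and quoted.
BEFORE = """
def add(a,b):
  return a+b
x = {  'a':1,
 'b':2 }
"""


# After: the same code in the style black is expected to output.
def add(a, b):
    return a + b


x = {"a": 1, "b": 2}
```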

Then you have import formatters. Those are different: they don't format all the code, only the import lines. An import formatter will group them by category, usually stdlib, then 3rd party packages, then your modules.

They turn a messy bush of statements you grow over your various attempts into a neat table of contents.

It's not mandatory, but it's quite nice.

Again, there are several competitors. I keep coming back to isort, because it has a feature that I really like, called float to top. However, if you read this article, it's likely you would fare better with ruff: it's fast, has sane defaults, and also packs a linter.

Before isort:

After isort:

Linters

We are now entering the realm of nice-to-have.

A linter is a program that will read your code, and tell you that you have wasted your life.

More accurately, it will zoom on the pores of the skin of what you wrote and show you all the imperfections: too many variables, this name sounds like a German dish, this attribute doesn't exist, you forgot a comma, you have one too many commas, the comma is at the wrong place, you should apologize to that comma, etc.

Despite how awful that sounds, it's actually useful, especially if you are in a team. It will keep a certain level of quality and consistency across the work of everyone.
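If you wonder how a program can judge code without running it, here is a toy sketch using the standard library ast module. It is nowhere near a real linter, and it is not how pylint or ruff are implemented: toy_lint and its two rules are invented for the illustration. It only parses the source and looks for a missing module docstring and a requests.get() call without a timeout.

```python
import ast

# The code to inspect is just text: it is parsed, never executed.
SOURCE = '''
import requests

resp = requests.get("https://peps.python.org/api/peps.json")
'''


def toy_lint(source):
    """Return a list of complaints about the given source code."""
    tree = ast.parse(source)
    messages = []

    # Rule 1: the module should start with a docstring.
    if ast.get_docstring(tree) is None:
        messages.append("1: missing module docstring")

    # Rule 2: every requests.get(...) call should pass a timeout.
    for node in ast.walk(tree):
        if (
            isinstance(node, ast.Call)
            and isinstance(node.func, ast.Attribute)
            and node.func.attr == "get"
            and isinstance(node.func.value, ast.Name)
            and node.func.value.id == "requests"
            and not any(kw.arg == "timeout" for kw in node.keywords)
        ):
            messages.append(f"{node.lineno}: requests.get() without a timeout")

    return messages


for message in toy_lint(SOURCE):
    print(message)
```

Real linters ship hundreds of such rules, plus the configuration needed to silence the ones you disagree with.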

Linters are not nice. They can hurt your feelings.

The meanest one is certainly pylint, which will even rate your code on a scale of 10, and sometimes give you a negative rating when it's in a bad mood. Take this 5-line script:

    import requests
    from rich.pretty import pprint

    resp = requests.get("https://peps.python.org/api/peps.json")
    data = resp.json()
    pprint([(k, v["title"]) for k, v in data.items()][:10])

Here is what happens when you run pylint with the default settings on it:

    pylint script.py
    ************* Module foo
    script.py:1:0: C0114: Missing module docstring (missing-module-docstring)
    script.py:1:0: C0104: Disallowed name "foo" (disallowed-name)
    script.py:12:7: W3101: Missing timeout argument for method 'requests.get' can cause your program to hang indefinitely (missing-timeout)
    -----------------------------------
    Your code has been rated at 4.00/10

4 out of 10. I'm a bloody Python expert and I'm failing to live up to Pylint's expectations with barely more than a “hello world”.

Now, while pylint is definitely my linter of choice for serious projects, I would recommend against it if you just want to give the concept a try. You would hate it. It would hate you. It's no way to start a relationship.

Ruff is a better choice: it has better default settings, focuses on more important things out of the box, and has less legacy burden. And it also formats imports. Not to mention it's much, much faster.

I would not recommend flake8 or pyflakes, they are quite redundant with ruff or pylint.

On top of that, you may add pydocstyle if you wish for pedantic reminders that you don't write good enough docs and you should feel bad. It's not a great tool, with plenty of bugs and limitations, but if you are writing a library for others, it's better than nothing.

Type checkers

Static type checkers will take code like this:

    def if_you_are_grug_and_you_know_it_clap_your(hands: str):
        hands.clap()

And tell you that the type of hands doesn't have the method clap, without having to run the program:

    script.py:2: error: "str" has no attribute "clap" [attr-defined]
    Found 1 error in 1 file (checked 1 source file)

You can perfectly well develop a big project without any type checker. Hey, what do you think we did before YouTube existed? We read RFCs for breakfast and wrote code with butterflies, evidently.
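For contrast, here is a small sketch of what happens to the same kind of mistake when no type checker is around. Python does not enforce annotations at runtime, so the error only shows up when the faulty line actually executes. The clap_your function is a shortened, made-up variant of the example above.

```python
def clap_your(hands: str) -> None:
    # The annotation says "str", and str has no clap() method.
    # Python itself does not check this until the line runs.
    hands.clap()


try:
    clap_your("two hands")
except AttributeError as error:
    # Without a type checker, this is the first time the mistake surfaces.
    message = str(error)
    print(f"Caught at runtime: {message}")
```

If that call lives in a rarely used code path, "when the line runs" may well mean "in production". A static checker reports it as soon as you save the file.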

What's more, Python typing is sometimes exasperating. It's not super ergonomic, and feels bolted onto the language.

Nevertheless, there are some projects where you can't afford not to have a type checker. If you work with software dealing with human life, very expensive hardware, or critical processes, it will save you not only from simple bugs now, but also from other people introducing them in the future.

It's definitely a trade-off, though. Your code will gain weight, lose flexibility, and you will have to write non-idiomatic things to please your new checker overlord.

Still, there are situations where it's worth it. With my current client, we found critical bugs months after the start of the project, despite 95% test coverage, thanks to adding more and more type declarations. We also prevented similar bugs from ever happening later on.

Of course, you could choose alternative type checkers, such as pyright from Microsoft. This is what you get by default when you use Visual Studio Code. But mypy, despite all of its flaws, is what I would advise you to use, simply because you'll get better support and docs for it. And ChatGPT will help you more with it.

Test runner

No matter what everybody tells you, there is a huge quantity of untested code out there. Well, it's tested… but by users… in production. So don't let people tell you that you are not a dev if you don't write unit tests. The world runs on buggy software. We should not be proud of it, but ignoring it is silly.

That being said, the more experienced you get, the more you will want tests, and the more you will miss them when they are not there. That doesn't make writing tests fun: it's a damn soul-sucking experience that I delegate to an LLM (previously called “interns”) as much as I can, but there is a limit to that.

You will have to write tests, if you want them to be good, and to be the right ones. The ones that will be just enough but not too much for your project.

So you better make writing tests easier, and for that, a good test runner is worth every single second spent learning it. In Python, you have, yet again, many of them, such as unittest and nose.

But there is not really any competition: pytest is the best one. It scales down, being super easy to use, but it also scales up, with mighty features and a rich ecosystem of plugins.

Bottom line, if you want to become serious with Python one day, you will have to learn to use it.
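To show how little ceremony the "scales down" part involves, here is a minimal sketch of a pytest test file. The slugify function is a made-up example. The only conventions assumed are pytest's defaults: a file named like test_slugify.py, functions whose names start with test_, and plain assert statements.

```python
def slugify(title: str) -> str:
    """Hypothetical function under test: 'Hello World' -> 'hello-world'."""
    return "-".join(title.lower().split())


def test_slugify_lowercases_and_joins_words():
    assert slugify("Hello World") == "hello-world"


def test_slugify_collapses_extra_whitespace():
    assert slugify("  Hello   World ") == "hello-world"
```

Running "pytest" in the directory collects both functions, runs them, and shows a detailed diff of the values when an assert fails. No class, no special assertion methods, and not even an import of pytest for simple cases like this one.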

Pytest might not be enough, though. If you make a lib, chances are you will want to run those tests with different versions of Python to be sure it works with all of them. It's not a common use case, but it's a real one. The contenders for this are tox and nox.

Once more I have a preference for one, which is nox. It uses pure Python syntax for the pipeline configuration instead of a text config file, and given I have a difficult relationship with DSLs, I'll stick with that. Both are good, though.

Task runner

While I would advise setting up those tools in your editor, you will need them in the CLI as well: to process the entire project, to run them on a server or in a CI, or to trigger them automatically with git hooks.

Not to mention the myriad other commands you might need for your project: a builder, a packager, a dev server for the backend, maybe something for the front...

Now multiply that by many versions and many projects, and you get a hell of a cartesian product of flags and options you will never remember.

This is where a good task runner helps.

I have one task runner (I like doit, and we have a tutorial on it) on each project to abstract the whole process.

I don’t run mypy, pylint or ruff, I run "doit check_code". I don’t run black then isort, I run "doit format". I don’t run pytest or tox, I run "doit test".

This way I don't have to remember what to start for which directory and syntax. It's normalized. And I can read the dodo.py file for a refresher of what the project uses.
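As an illustration, a dodo.py providing those three commands could look roughly like the sketch below. It relies on doit's convention that each function named task_something defines a task called "something", and returns a dict whose "actions" are shell commands. The exact commands and the "src" directory are assumptions for the example, not my actual configuration.

```python
# dodo.py: doit reads this file from the project root.


def task_format():
    """Format the code, then sort the imports."""
    return {"actions": ["black .", "isort ."]}


def task_check_code():
    """Run the linters and the type checker."""
    # "src" is a placeholder for wherever your code lives.
    return {"actions": ["ruff check .", "pylint src", "mypy src"]}


def task_test():
    """Run the test suite."""
    return {"actions": ["pytest"]}
```

With this file in place, "doit format", "doit check_code" and "doit test" each run the matching list of commands in order.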

This reduces the cost of context switching.

Tips:

All those tools are great, but don't over-complicate your stack. You must always feel a sense of ease using it, minus the occasional learning of a new tool. If you spend too much time worrying about your pen and paper instead of writing a novel, you are not much of an author.

You don't need to use everything, everywhere, all at once. Pick one, give it a try, pick another one, etc. Until something sticks. Then choose. Not every project needs a type checker, most projects don't need tox, etc.

Introduce them gradually. Master one before using another one. They should help you, not slow you down.

Put as much config as possible into pyproject.toml. A lot of tools will happily read their configuration from it, and it will give you one source of truth.

Coding with butterflies is not as fun as it seems, so have a default config in your editor setup that includes a few basic tools. It should be activated even if you are not in a project and only edit a single file. You never know when 5 lines will turn into 5000.

Thanks! I've been recently acquainted with your blog and I have to say I enjoy doing the reads and learn a lot. Many thanks Bite Code! Just one question if I may. I read in another article of yours not to install anything outside a virtual environment, does that rule apply to Nox? I've read that it is supposed to be installed globally, so I just wonder if it could work just fine inside a virtual environment or if there is any advantage by installing it globally. Thanks in advance!

A nice alternative to doit is pre-commit (https://pre-commit.com). It's predicated on using Git to version control your project since it uses a pre-commit hook, but there is a vast array of hooks available for pre-commit for pylint, ruff, isort, nbstrip (to remove executed cells from Jupyter Notebooks that are under version control) and many, many more.

It's super useful. I've written about it: https://ns-rse.github.io/posts/pre-commit/