What's the deal with setuptools, setup.py, pyproject.toml and wheels?

Why? Why? Why? Oh, that's why.

Summary

There are 3 sides to this story:

Building a package.

Installing a package.

Configuring tooling for a project.

setup.py is a file that was used both for building and installing a package in the past. You'd call python setup.py sdist or python setup.py bdist_egg to create a tar.gz or an egg, and pip install would run setup.py to install them.

The latter is much rarer today because the Python community moved to a static package format called wheels. Those wheels don't need to execute code for installation, and so don't ship a setup.py file. When you pip install something in a venv in 2024, it usually transparently downloads and unpacks the wheel without the user having to know or do anything, even for complex compiled extensions like pandas.

However, projects that are old enough may still have a setup.py file for the author of the project, used to build the package. Users don't have to know about it, only the dev.

This is also getting rarer and rarer. More and more projects use pyproject.toml to hold the project metadata, define the build process, and even configure all the tooling needed, such as linters, test runners or type checkers.

And if you wonder what the hell is setuptools, distutils, setup.cfg and how we got there, you can read on.

It was a land before time

pyproject.toml has become a God file in the Python world, like a package.json that invited the whole neighborhood to the party, and now nobody wants to leave. So what is pyproject.toml, really? What should you put in there? And how did we get there?

Well, at the risk of sounding like a broken record, Python is... old.

1991 type of old. At the time, you didn't have to care about emoji support in text, so you could use bytes and strings interchangeably. CPUs didn't have multiple cores, so the GIL seemed like a great idea. Installing a package basically meant copy/pasting the code into the current directory.

What's amazing is that it still all works. You can match a regex on bytes, update a list from multiple threads without crashing, or dump a pure Python package into your project then import it like it's part of your code.

It's trendy to hate on those characteristics nowadays, but I dare you to find many decisions you took 30 years ago that are still holding up as well today. If you were even born then.

But they do come with a ton of baggage, and packaging is at the top of everyone's pain list, right there with free multi-threading.

Let's go back in time to understand the whole shenanigans. Once you get the full picture, it will be easy to know what to keep and what to throw away.

On the first day, there were source packages

Because of the way the Python import system works (another design decision that shows its age), any module or package in the Python Path is importable.

So for a long time, sharing code was basically using dumb archives, mostly tar.gz and .zip files, and decompressing them inside the Python installation directory, in something like site-packages. In fact, since V2.3 you don’t even need to open them, Python can import a zip directly. Yep.
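To make the "import a zip directly" part concrete, here is a minimal sketch. The module name zipped_greeter and the archive name bundle.zip are made up for the example; the only real mechanism at play is that any entry on sys.path, zip files included, is searched for modules.

```python
import importlib
import sys
import tempfile
import zipfile
from pathlib import Path


def import_from_zip(module_name: str, source: str):
    """Pack `source` into a zip archive and import it straight from there."""
    # Hypothetical archive name, written to a throwaway temp directory.
    archive = Path(tempfile.mkdtemp()) / "bundle.zip"
    with zipfile.ZipFile(archive, "w") as zf:
        zf.writestr(f"{module_name}.py", source)

    # Putting the archive itself on sys.path is enough: no extraction needed.
    sys.path.insert(0, str(archive))
    importlib.invalidate_caches()
    return importlib.import_module(module_name)


greeter = import_from_zip(
    "zipped_greeter",
    "def hello():\n    return 'hello from a zip'\n",
)
print(greeter.hello())
print(greeter.__file__)  # the path points inside bundle.zip
```

This only works for pure Python code; compiled extensions cannot be loaded from inside an archive this way.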

But you did have to build this archive, define some metadata for it, and on installation, copy the files onto the Python Path.

On top of that, some packages already came with compiled extensions, and package authors had to build .exe for some platforms, while package users had to compile source files on their computers.

The machinery responsible for automating all that was centralized into a library called distutils. And because not much was standardized at the time, you kinda defined the whole logic in a file on a per-package basis. This file was traditionally named setup.py, and contained whatever arbitrary code the devs needed to duct-tape the package installation together. To give you a taste, here is what the PyGreSQL setup.py file looked like in 2006:

#!/usr/bin/env python
# $Id: setup.py,v 1.22 2006/05/31 00:04:01 cito Exp $

"""Setup script for PyGreSQL version 3.8.1

Authors and history:
* PyGreSQL written 1997 by D'Arcy J.M. Cain <darcy@druid.net>
* based on code written 1995 by Pascal Andre <andre@chimay.via.ecp.fr>
* setup script created 2000/04 Mark Alexander <mwa@gate.net>
* tweaked 2000/05 Jeremy Hylton <jeremy@cnri.reston.va.us>
* win32 support 2001/01 by Gerhard Haering <gerhard@bigfoot.de>
* tweaked 2006/02 Christoph Zwerschke <cito@online.de>

Prerequisites to be installed:
* Python including devel package (header files and distutils)
* PostgreSQL libs and devel packages (header files of client and server)
* PostgreSQL pg_config tool (usually included in the devel package)
  (the Windows installer has it as part of the database server feature)

Tested with Python 2.4.3 and PostGreSQL 8.1.4. Older version should work
as well, but you will need at least Python 2.1 and PostgreSQL 7.1.3.

Use as follows:
python setup.py build # to build the module
python setup.py install # to install it

For Win32, you should have the Microsoft Visual C++ compiler and
the Microsoft .NET Framework SDK installed and on your search path.
If you want to use the free Microsoft Visual C++ Toolkit 2003 compiler,
you need to patch distutils (www.vrplumber.com/programming/mstoolkit/).
Alternatively, you can use MinGW (www.mingw.org) for building on Win32:
python setup.py build -c mingw32 install # use MinGW
Note that the official Python distribution is now using msvcr71 instead
of msvcrt as its common runtime library. So, if you are using MinGW to build
PyGreSQL, you should edit the file "%MinGWpath%/lib/gcc/%MinGWversion%/specs"
and change the entry that reads -lmsvcrt to -lmsvcr71.

See www.python.org/doc/current/inst/ for more information
on using distutils to install Python programs.

"""

from distutils.core import setup
from distutils.extension import Extension
import sys, os

def pg_config(s):
    """Retrieve information about installed version of PostgreSQL."""
    f = os.popen("pg_config --%s" % s)
    d = f.readline().strip()
    if f.close() is not None:
        raise Exception, "pg_config tool is not available."
    if not d:
        raise Exception, "Could not get %s information." % s
    return d

def mk_include():
    """Create a temporary local include directory.

    The directory will contain a copy of the PostgreSQL server header files,
    where all features which are not necessary for PyGreSQL are disabled.
    """
    os.mkdir('include')
    for f in os.listdir(pg_include_dir_server):
        if not f.endswith('.h'):
            continue
        d = file(os.path.join(pg_include_dir_server, f)).read()
        if f == 'pg_config.h':
            d += '\n'
            d += '#undef ENABLE_NLS\n'
            d += '#undef USE_REPL_SNPRINTF\n'
            d += '#undef USE_SSL\n'
        file(os.path.join('include', f), 'w').write(d)

def rm_include():
    """Remove the temporary local include directory."""
    if os.path.exists('include'):
        for f in os.listdir('include'):
            os.remove(os.path.join('include', f))
        os.rmdir('include')

pg_include_dir = pg_config('includedir')
pg_include_dir_server = pg_config('includedir-server')

rm_include()
mk_include()

include_dirs = ['include', pg_include_dir, pg_include_dir_server]

pg_libdir = pg_config('libdir')
library_dirs = [pg_libdir]

libraries=['pq']

if sys.platform == "win32":
    include_dirs.append(os.path.join(pg_include_dir_server, 'port/win32'))

setup(
    name = "PyGreSQL",
    version = "3.8.1",
    description = "Python PostgreSQL Interfaces",
    author = "D'Arcy J. M. Cain",
    author_email = "darcy@PyGreSQL.org",
    url = "http://www.pygresql.org",
    license = "Python",
    py_modules = ['pg', 'pgdb'],
    ext_modules = [Extension(
        '_pg', ['pgmodule.c'],
        include_dirs = include_dirs,
        library_dirs = library_dirs,
        libraries = libraries,
        extra_compile_args = ['-O2'],
    )],
)

rm_include()

Even if distutils provided some common tooling, each package author was more or less recoding half of a build system. Also, there was no reliable dependency management.

This became a problem because...

On the second day, there were package repositories

Because of the success of the visionary Comprehensive Perl Archive Network, people started to long for something similar for Python, and so was born the Cheese Shop. The original name was a reference to a Monty Python sketch, but you know it today as PyPI, and it became the main repository of Python packages for the world to share.

I know it's a bit weird when you live in a world where you can npm/pip/gem install anything, but at the time you had to download the package you wanted manually, and then run python setup.py install and pray for it to not destroy your machine.

And if you think it's bad, the JS/PHP/Ruby ecosystem had it way, way worse, believe it or not. That is literally how I started to use Python: because I tried PHP, Ruby and JS, and installing 3rd party deps was a miserable experience. PHP and JS were still at the copy/paste everything manually stage (which partially explains the success of universal deps like jQuery), and ruby lib installs crashed more often than today's Boeing planes.

Oh, the irony!

Of course, people didn't want to do that dance forever, and the setuptools project was born: a library to replace (yet ship) distutils that would come with standardized dependency management, a new format for binary distributions with the extension .egg, and the ancestor of pip, the easy_install command.

It was better in every way: you could just easy_install package to fetch something from PyPI, and it would resolve the dependencies and download them for you. Something you might take for granted, but was far from a given at the time. Instead of just having tar.gz files, authors could also provide pre-compiled .egg files, so you didn't have to build the code for C extensions on your machine.

If you wonder why Python is everywhere in the Scientific and AI community, that's because of this: it tackled the hard problem of shipping binary packages quite early and never stopped improving the process. To this day, no other scripting language has a better story for it.

Nevertheless, because everybody was trying to figure things out, all of those attempts were deeply flawed:

You still had to execute arbitrary Python code to install packages. setup.py was still there, but it imported setuptools instead of distutils.

easy_install dependency resolution was limited (e.g., no backtracking, no conflict resolution), the tool failed silently and didn't provide uninstallation (!).

.eggs were hard to produce and maintain. And there was no standard way to indicate what platform they were compatible with.

There was no isolation mechanism, so you would eventually destroy your Python installation after installing so many things on top of each other.

The ecosystem experimented with a lot of things. The zope folks tried to over-engineer something as usual and attempted to conquer the world with buildout and paste. Thank God they failed.

There was the great schism when the distutils team came out with distutils2 and we didn't know what to use anymore, and then it was merged back to setuptools. Then some talks about setuptools2 that never really translated to something.

It was a confusing time.

Thankfully, the community converged to what we have today.

On the third day came pip, venv and wheels

When you use cargo, going back to pip may hurt your soul a little, but when it came out, it was a revolution.

Better dependency resolution, uninstallation, retry mechanism, pinned dependencies, the ability to list everything you installed, share it, and reinstall it again!
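The "list everything you installed" part is easy to picture with the standard library alone. This is only a rough sketch in the spirit of pip freeze, not how pip actually implements it: it asks importlib.metadata for every distribution visible to the current interpreter and prints pinned name==version lines.

```python
from importlib.metadata import distributions


def freeze() -> list[str]:
    """Return 'name==version' pins for every distribution this interpreter sees."""
    pins = set()
    for dist in distributions():
        meta = dist.metadata
        name = meta.get("Name") if meta is not None else None
        if name:  # skip broken installs that lack usable metadata
            pins.add(f"{name}=={dist.version}")
    return sorted(pins, key=str.lower)


for line in freeze():
    print(line)
```

Run it inside a venv and the output only covers that environment, which is exactly what makes a pinned list shareable and reinstallable.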

But above all, pip was natively aware of virtual environments. Indeed, a smart fellow named Ian Bicking had created a new way to isolate all those installations: the virtualenv tool. It was a beautiful solution: you create separate envs for each project, and suddenly, you can't break the whole world anymore and can mess around with 3rd party libs to your heart's content!

Even today, Python is criticized for the venv system. Why not load something like node_modules automatically? There is a lot to say about that, and that could fill an entire article, so I'll skip it for now. The point is, venvs were a significant improvement.

This also had the side effect of streamlining a lot of workflows, opening the door to the Cambrian explosion of alternative tools such as virtualenvwrapper, pew, pipx, pipenv, poetry, pyenv, etc., all experimenting with new ways to install Python stuff.

pip eventually got included with Python, and when it could not be (e.g., when Linux distro managers sabotaged the Python packaging ecosystem, with the best intentions in mind. Maybe I'll write about that one day), a module named ensurepip was provided to, well, ensure that pip is available, by installing the copy bundled with Python. Something similar to virtualenv, the venv command, was also finally added to Python's standard toolkit.
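For the curious, the isolation trick is visible from Python itself. The sketch below uses the standard venv module to create a bare environment in a temp directory (the demo_env name is arbitrary) and shows the usual way to detect whether the running interpreter lives in one. It skips pip on purpose to stay fast and independent of ensurepip.

```python
import sys
import tempfile
import venv
from pathlib import Path


def in_virtualenv() -> bool:
    """True when the running interpreter belongs to a virtual environment."""
    # Inside a venv, sys.prefix points at the env, while sys.base_prefix
    # still points at the Python installation it was created from.
    return sys.prefix != sys.base_prefix


def create_env(target: Path) -> Path:
    """Create a bare venv, roughly what `python -m venv --without-pip` does."""
    venv.create(target, with_pip=False)
    # pyvenv.cfg is the marker file that tells Python "this is a venv".
    return target / "pyvenv.cfg"


cfg = create_env(Path(tempfile.mkdtemp()) / "demo_env")
print(cfg.read_text())
print("running inside a venv:", in_virtualenv())
```

The environment is little more than a directory, a marker file and a Python executable that resolves its site-packages locally.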

To this day, the duo pip / venv is the only thing that has truly won the Python packaging lottery. All the other projects are still fighting for user shares, although I do have my money on Astral providing the next evolutionary leap.

But there was still one elephant in the room: compiled extensions.

Say cheese again

Despite a long history of shipping blobs of Pascal, C, C++, and assembly to number lovers all over the planet, Python had a problem: it was a bloody pain.

Less of a pain than with any other non-compiled alternative, mind you.

But a pain nonetheless.

If you remember trying to install Numeric, the father of numpy, you know what I mean.

And a smart company named Continuum decided to make a business out of it, creating the famous Anaconda, a Python distribution that did all the hard work of compiling this mess for you.

Anaconda was a savior for the scientific community. Installing Frankenstein deps worked out of the box, and in fact, you often didn't have to install anything because it came with a lot of what you needed already pre-installed.

You did have to pay a little price: live in the anaconda bubble, isolated from anything else, because if you mixed pypi repos with conda channels, bad things would happen.

It was a fair compromise for a long time.

Then at the end of summer 2012, PEP 427 introduced a new package format for Python: the wheels (because wheel of cheese, get it?).

The wheels were .whl files designed with several goals in mind:

Being completely static, so pip doesn't have to run code from a setup.py file to install a package.

Supporting both pure Python and binary code, so that we have one true universal format to install anything.

Having extended metadata, so that you can know what platform a particular build can run on. Later on came support for the manylinux tag, which removed the need for source distributions across the most popular distros.
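That platform metadata is visible right in the file name. PEP 427 defines it as {distribution}-{version}(-{build tag})?-{python tag}-{abi tag}-{platform tag}.whl, and the sketch below splits it naively. The example_pkg file names are made up for illustration; for real work, the packaging library ships a more robust parser.

```python
from typing import NamedTuple, Optional


class WheelName(NamedTuple):
    distribution: str
    version: str
    build: Optional[str]
    python_tag: str
    abi_tag: str
    platform_tag: str


def parse_wheel_filename(filename: str) -> WheelName:
    """Split a wheel file name into the fields defined by PEP 427."""
    if not filename.endswith(".whl"):
        raise ValueError(f"not a wheel: {filename!r}")
    parts = filename[: -len(".whl")].split("-")
    if len(parts) == 5:
        name, version, py, abi, plat = parts
        build = None
    elif len(parts) == 6:  # the optional build tag is present
        name, version, build, py, abi, plat = parts
    else:
        raise ValueError(f"unexpected wheel name: {filename!r}")
    return WheelName(name, version, build, py, abi, plat)


# Hypothetical file names, one pure Python and one compiled.
pure = parse_wheel_filename("example_pkg-1.0-py3-none-any.whl")
binary = parse_wheel_filename("example_pkg-1.0-cp311-cp311-manylinux_2_17_x86_64.whl")
print(pure)
print(binary)
```

A py3-none-any wheel runs anywhere Python 3 does, while the second one is tied to CPython 3.11 on a compatible Linux. pip reads those tags to pick the right file without executing anything.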

It took a while to take off, but wheels have today annihilated 80% of the packaging problems that plagued Python for so long, provided you actually use them. I’m serious. It’s that much of a difference.

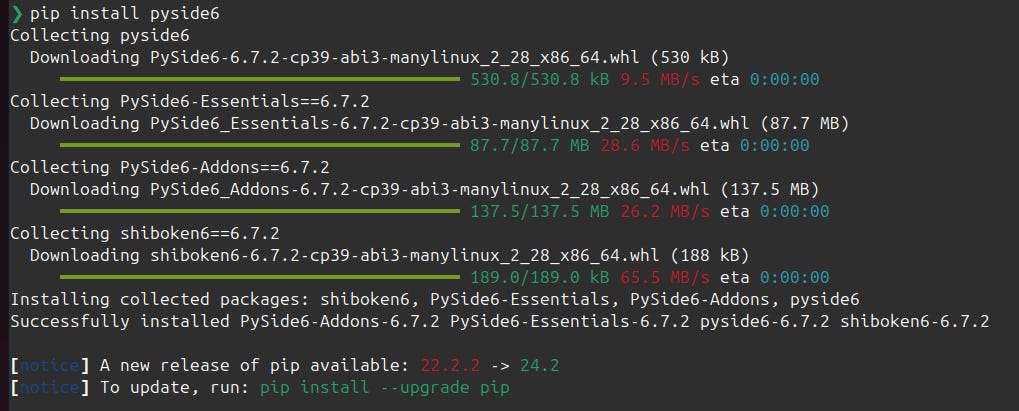

Running pip install for things like scipy or pyside6 on Ubuntu works out of the box in a venv, no matter how many dragons those damn packages have in there.

That’s why I say today most “packaging problems” are actually bootstrapping problems. With imports and versioning sprinkled on top.

Today, if you install a package with pip in a venv, it's probably a wheel, and you don't even know it. You don’t have to do anything about it.

Anaconda is now more a source of problems than solutions, and Continuum provides better value with managed deps and cloud solutions it sells to corporations. If you have the choice though, don’t use it.

Toward pyproject.toml

This fixed a lot of things for loads of users. Indeed, most coders use packages but don’t make their own.

Yet, as wheels have removed the need to have setup.py files sent to the end user, many projects still had them in their repo. Why?

Because lib authors still needed to create said wheels, and for some time, setuptools + wheel + twine was the combo to do it.

So while it was better to not ship executable code, we still had to solve the problem of having build metadata that could be parsed in a standard way.

The team at setuptools made an attempt with setup.cfg, an INI file version of setup.py that you could parse without having to run arbitrary code, with fancy features like loading the version from a Python module:

version = attr: the_package_name.__version__

To my disappointment, it never took off.
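To see why a static file was attractive, here is a small sketch that reads metadata from a setup.cfg with nothing but configparser. The file content is a made-up example, and the install_requires entries are placeholders. Note that the attr: directive is only read here as plain text; resolving it is still the build tool's job.

```python
import configparser

# Hypothetical setup.cfg content, for illustration only.
SETUP_CFG = """\
[metadata]
name = the_package_name
version = attr: the_package_name.__version__

[options]
install_requires =
    requests
    click
"""


def read_static_metadata(text: str) -> dict:
    """Extract a few fields from setup.cfg without running any project code."""
    parser = configparser.ConfigParser()
    parser.read_string(text)
    requires = parser.get("options", "install_requires", fallback="")
    return {
        "name": parser.get("metadata", "name"),
        "version": parser.get("metadata", "version"),
        # Multi-line values come back as one string, one requirement per line.
        "install_requires": [r.strip() for r in requires.splitlines() if r.strip()],
    }


metadata = read_static_metadata(SETUP_CFG)
print(metadata)
```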

But in 2016, a much more minimalist format timidly appeared as "PEP 518 – Specifying Minimum Build System Requirements for Python Projects". The goal was to have one format to configure which build tool you wanted to use for your project. By default, it would allow setuptools, of course, but you could choose something else, opening the door to alternatives, allowing poetry, flit and hatch to eventually flourish.

It was a smart move: instead of trying to define a standard for building things that would eventually be proven insufficient, make the smallest change possible, and allow the community to play with it until a good solution emerges.

As one of the authors, Brett Cannon, repeatedly told me despite my doubts, it was not meant to replace setup.py. Just a simple, extensible file format to foster a thriving build ecosystem. And who knows, maybe one day pick the most popular tool, and integrate that into Python. Worked for pip and venv, after all.

Predictably, it didn't go that way.

pyproject.toml proved to be an immense success for everything. It was standard, it was clean, it was extensible, and it was there. It quickly became a setup.py replacement for every single build tool under the sun. Then also the place where all project management tools, from linters to task runners, would put their configuration.

pyproject.toml turned into the central pillar of Python project management. Even projects NOT using it for building anything used it for configuration.

Four years later, this reality sank in, and PEP 621 officially extended pyproject.toml to provide things like dependencies, version, description, and so on.

Today, pyproject.toml is simply the standard to declare the basic information about your package. But even if you don't plan to make a package, you likely want one anyway, because mypy, uv, black or pytest all can store their config in it, and it's much better to have that in a single file than in multiple ones with different formats scattered in your project.

Here is a pyproject.toml on a side-project I used recently. You can see it packs a little bit of everything:

[build-system]
requires = ["hatchling"]
build-backend = "hatchling.build"

[project]
name = "backend"
dynamic = ["version"]
description = ''
readme = "README.md"
requires-python = "~=3.11.3"
license = "MIT"
keywords = []
authors = [{ name = "BTCode", email = "contact@bitecode.dev" }]
dependencies = [
    "django",
    "django-ninja",
    "django-extensions",
    "uuid6",
    "pyotp",
    "playwright",
    # requires "playwright install chrome" after
    "django-huey",
    "sentry-sdk[django]",
    "logfire[django]",
    "atproto",
    "urllib3",
    "Mastodon.py",
    "environs",
    "linkedin",
]

[tool.hatch.envs.default]
dependencies = ["ruff", "pytest", "pytest-django", "watchdog", "uv"]
installer = "uv"
path = ".venv"

[tool.hatch.envs.prod]
installer = "uv"
path = ".venv_prod"
type = "pip-compile"
requires = ["hatch-pip-compile"]
pip-compile-hashes = true
lock-filename = "backend/requirements.txt"
pip-compile-installer = "uv"

[tool.hatch.build.targets.wheel]
packages = ["backend/api"]

[tool.hatch.version]
path = "backend/api/__about__.py"

[tool.coverage.run]
source_pkgs = ["api", "tests"]
branch = true
parallel = true
omit = ["api/__about__.py"]

[tool.coverage.paths]
backend = ["backend/api", "*/backend//api"]
tests = ["*/api/tests"]

[tool.coverage.report]
exclude_lines = ["no cov", "if __name__ == .__main__.:", "if TYPE_CHECKING:"]

[tool.pytest.ini_options]
DJANGO_SETTINGS_MODULE = "project.settings_local"
python_files = ["tests.py", "test_*.py", "*_tests.py"]

This leaves us in a weird situation though: Python comes with zero blessed tool to build a package that is installed by default on all major platforms. Even setuptools, which is increasingly being replaced by more modern 3rd party alternatives, needs to be explicitly installed in Linux. Bottom line, if you want to publish a lib, you have to first pick a tool. A hard decision to make in such a big ecosystem.

But at least you know you can configure it all in pyproject.toml.

At this stage, I hope you are starting to appreciate the journey the Python community went through to reach where we are today.

Make no mistake, every single popular language, no matter how modern they look today, will have to go through that road as well as the world evolves and they need to deal with their legacy decisions without breaking half the existing code bases.

That doesn't make it easier on you when you have to pay the consequences in your day-to-day coding life, I get it.

But maybe, just maybe, you'll be more lenient on the huge chain of people, who, for decades, have been working hard to ensure the open-source bazaar keeps functioning.

The curse of diversity

In 2024, it's fashionable to tout diversity as only a good thing, something you should definitely strive for, especially in FOSS. Yet if you tried to charge your mobile phone in 2010, juggling the bazillion formats for plugs each brand decided to patent, it was clear that normalization had a few perks.

As usual, it's a matter of balance. Diversity will foster innovation and flexibility, while uniformity will make for easier collaboration and remove friction.

On the other hand, in a standardized world, not fitting in comes with a higher cost for just existing. Took me a couple of decades to figure out this neuro-divergent thing.

And in a diverse world, not all differences will come in a package of your liking. Next time you meet an idiot, remember he may have a gene resistant to a disease and biological diversity contributes to humanity's resilience.

In the context of Python packaging, we have a huge diversity. There are so many legacy tools, and so many attempts to solve the lack of standards with yet a new standard. Python contributors realized they couldn’t make future-proof decisions and delegated the task to the community, which was very happy to oblige and expressed its own diversity through a great surge of productive creativity.

It’s the reason the language stayed relevant for so long, as a small open-source project used in so many different ways. Yes, until circa 2015, Python was not that big.

The other side of the coin is that between pyenv, venv, virtualenv, pip, anaconda, poetry, pipenv, uv, pipx, hatch, setuptools and so many more, users are completely lost. A huge amount of productivity disappears daily in efforts to navigate this labyrinth.

That's why I advocate to start by focusing on the basics. At least for a few years.

Follow the recipe from "Relieving your packaging pain" to bootstrap your Python projects, and only deviate from it once you are very comfortable with it.

On top of this, use pyproject.toml for everything you can. At the minimum, for linter, formatter or test runner configuration. It's standard, tools know it, people expect it, and the TOML format is the least of all evils. Slash complexity, limit divergence, centralize.

Forget about setup.py, setup.cfg or eggs. They still work, but they are legacy tech.

If you build and distribute your own packages (which, remember, few people do), you may use setuptools in pyproject.toml, but it's only one build tool option among many. If you create wheels for your users, it doesn't matter to them anymore. They don't have to know what build tool you use when they install your code, unlike it used to be with setup.py. It's transparent.

The choice of the build tool for a package you create is mostly a matter of taste, as only the author needs to install it during dev. Almost all tools can build wheels anyway, and wheels are what most people need.

setuptools does have one advantage: it’s already there in Windows and Mac.

Don't jump on the new packaging trend either. As you have noticed, the community is keen to experiment a lot. That's how we get better stuff. But the alternative branches don't get pruned to eventually merge back to the glorious sacred timeline. It takes time to get used to the multiverse. Practice selective ignorance.

I'll have to write a series on how to create packages, won't I?
