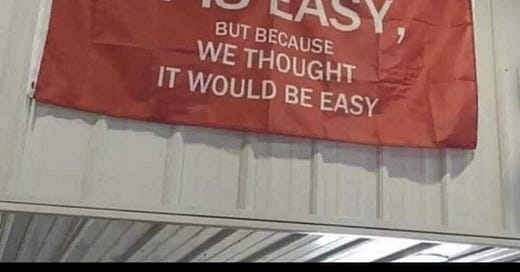

Summary

What could be easier than doing an HTTP POST?

This PR will be merged by lunch!

I just have to deal with the client's corporate setup.

And put that in a thread. With a queue. And deal with network unreliability. Potentially my own errors. Also backpressure. Plus resource allocation.

I mean, I didn't plan, plan.

But I'm an engineer, I got this.

Let's go. In and out, 20-minute adventure.

My client just told me they had a log aggregation system with an HTTP endpoint ready to be used for the product I was making for them.

Sweet, I thought. Nobody wants to debug this service by digging log files out of their Kubernetes pod. Structlog has a very flexible processor chain, so let's add an HTTP transport for our log messages.

When we log a message, do an HTTP POST, it shouldn't be that hard.
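To make the starting point concrete, here is a minimal sketch of that naive version, assuming only the standard library (urllib instead of requests) and a hypothetical endpoint URL. A structlog processor is just a callable taking `(logger, method_name, event_dict)` and returning the event dict; `HttpPostProcessor` and `post_json` are hypothetical names, and `send` is injectable so the sketch can be exercised without a network.

```python
import json
import urllib.request

# Hypothetical endpoint: the real aggregator URL is not given in the post.
LOG_ENDPOINT = "https://logs.example.invalid/ingest"


def post_json(url, payload, timeout=5.0):
    """Blocking HTTP POST of a JSON body, standard library only."""
    request = urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return response.status


class HttpPostProcessor:
    """Structlog-style processor: called as (logger, method_name, event_dict)."""

    def __init__(self, url=LOG_ENDPOINT, send=post_json):
        self.url = url
        self.send = send  # injectable, so tests need no network

    def __call__(self, logger, method_name, event_dict):
        # Naive version: the caller blocks until the POST returns (or raises).
        self.send(self.url, {"level": method_name, **event_dict})
        return event_dict  # hand the event unchanged to the next processor
```

It would be plugged in with something like `structlog.configure(processors=[HttpPostProcessor(), structlog.processors.JSONRenderer()])`. Every log call now waits on the network, which is exactly the problem the next section tackles.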

Let's do it right

The network is slow, so making HTTP calls in the middle of our calculation would likely slow down our own user requests to a crawl. But this part of the project is doing mostly long numpy processing, so asyncio is not a good choice.

Since both numpy and requests release the GIL during their slowest operations, this is the perfect use case for threads.

It doesn't have to be hard: thanks to the excellent ThreadPoolExecutor, I get a worker queue for free, which I can just feed log messages and be happy.
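A sketch of the threaded variant, assuming a blocking `send(payload)` function is supplied (hypothetical here). The processor only calls `submit()`, which returns immediately; the POST runs on the executor's worker thread. `BackgroundHttpProcessor` is an illustrative name, not the author's code.

```python
from concurrent.futures import ThreadPoolExecutor


class BackgroundHttpProcessor:
    """Structlog-style processor that hands the HTTP POST to a worker thread."""

    def __init__(self, send, max_workers=1):
        self.send = send  # hypothetical blocking POST function: send(payload)
        self.executor = ThreadPoolExecutor(
            max_workers=max_workers, thread_name_prefix="log-http"
        )

    def __call__(self, logger, method_name, event_dict):
        # Copy the dict: later processors may mutate it before the worker reads it.
        payload = dict(event_dict, level=method_name)
        # submit() enqueues and returns a Future right away; the caller never waits.
        self.executor.submit(self.send, payload)
        return event_dict
```

Note that any exception raised by `send` is stored on the returned Future, which nobody looks at, so failures vanish silently unless they are handled inside `send` itself.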

This operation cannot possibly fail.

Right, it's a corporate client

So, of course, there is a proxy to set up, since the whole network is filtered. And there is a self-signed certificate on the other side of the wire, for good measure. No, they don't have a way to provide it.

But that's alright. That's what Python is good at.

Let's load wincertstore, look up the certificate that was provisioned with the machine, and ship it.
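For reference, a standard-library sketch of the proxy plus extra-certificate setup, assuming the proxy URL and a PEM file holding the self-signed certificate are known (both placeholders here; this swaps wincertstore for a plain file). On Windows, `ssl.create_default_context()` already loads the system "CA" and "ROOT" stores, so a certificate provisioned there may be picked up without an explicit file.

```python
import ssl
import urllib.request


def build_corporate_opener(proxy_url, ca_file=None):
    """Opener that routes through a proxy and trusts an extra CA certificate.

    proxy_url and ca_file are placeholders for whatever IT actually provides.
    """
    context = ssl.create_default_context()  # default/system trust store
    if ca_file is not None:
        # Add the self-signed certificate (PEM) on top of the defaults.
        context.load_verify_locations(cafile=ca_file)
    return urllib.request.build_opener(
        urllib.request.ProxyHandler({"http": proxy_url, "https": proxy_url}),
        urllib.request.HTTPSHandler(context=context),
    )
```

The POST then goes through `opener.open(request, timeout=...)` instead of `urllib.request.urlopen`.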

There is also the little matter of registering on their API gateway, so a few dozen more lines of code.

We are not going to let small details like that slow us down.

Right, it's a corporate tool

So the log aggregation system has some peculiar features:

It answers a 404 if your payload contains data it doesn't expect.

It expects a JSON payload that must contain a certain key. The value of this key must be a string... in JSON. Double JSON encoding. You never know.

Despite being on VPN, using certificates and going through an API gateway, I still need to provide an auth token, because security.

I have to manually specify which bucket I log into. I can log into another team's bucket if I get it wrong.
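A sketch of a request builder covering these quirks. The field names `bucket` and `message` and the Bearer scheme are hypothetical, since the real ones aren't named above; the double encoding is the point. Sending only the expected keys also avoids the surprise 404.

```python
import json
import urllib.request


def build_log_request(url, event_dict, bucket, token):
    """Build the POST the aggregator expects.

    Hypothetical field names ("bucket", "message") and auth scheme (Bearer).
    """
    body = {
        "bucket": bucket,  # explicit target; a typo logs into another team's bucket
        # The aggregator wants this value to be a JSON *string*: double encoding.
        "message": json.dumps(event_dict),
    }
    return urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),  # only expected keys, no extras
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {token}",  # still required behind VPN + gateway
        },
        method="POST",
    )
```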

Keeps me young.

Of course the network can have problems...

Welp, it's the network, dummy. It's full of gremlins that eat the cables, minor divinities playing out ineffable plans on the container clusters, and very confused puny humans everywhere in the graph.

So you better deal with that.

I have to retry when some stuff fails. But not all stuff, only retryable stuff. Transient errors.

And I must not retry too many times, or for too long. That requires several timeouts and a counter.

Also, I probably don't want to hammer the aggregation system, so it's good taste to add exponential backoff. Plus a little bit of jitter, so that all instances don't retry at exactly the same time. Tenacity is good for this.
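To show what that policy involves, here is a standard-library sketch (not Tenacity) of retrying only transient errors, with an attempt counter, an overall deadline, and capped exponential backoff with "full jitter". The set of transient status codes is an assumption; 404 is left out because this aggregator uses it to reject payloads.

```python
import random
import time
import urllib.error

# Assumed transient statuses; 404 means "payload rejected" here, so no retry.
TRANSIENT_STATUSES = {429, 502, 503, 504}


def is_transient(exc):
    # HTTPError subclasses URLError, so it must be checked first.
    if isinstance(exc, urllib.error.HTTPError):
        return exc.code in TRANSIENT_STATUSES
    return isinstance(exc, (urllib.error.URLError, TimeoutError, ConnectionError))


def call_with_retry(fn, *, max_attempts=5, max_elapsed=30.0, base=0.5, cap=8.0,
                    sleep=time.sleep, clock=time.monotonic):
    """Call fn(); retry transient failures with capped exponential backoff + jitter."""
    start = clock()
    for attempt in range(1, max_attempts + 1):
        try:
            return fn()
        except Exception as exc:
            if not is_transient(exc) or attempt == max_attempts:
                raise  # permanent error, or out of attempts
            # Full jitter: uniform delay in [0, min(cap, base * 2**(attempt - 1))].
            delay = random.uniform(0, min(cap, base * 2 ** (attempt - 1)))
            if clock() - start + delay > max_elapsed:
                raise  # sleeping would blow the overall deadline
            sleep(delay)
```

With Tenacity, roughly the same policy reads `@retry(retry=retry_if_exception(is_transient), stop=stop_after_attempt(5) | stop_after_delay(30), wait=wait_random_exponential(multiplier=0.5, max=8), reraise=True)`. Passing `sleep` and `clock` in as parameters is one way to test this without patching `time`.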

It's a bit of work, and damn annoying to test. Side effects everywhere.

I hope you like mocks.

Still, I love my job. Those little challenges make life interesting.

Thou shalt love thy colleague as thyself

"What happens if the aggregation service is down for the day?", asked my nontechnical yet pretty smart colleague.

"Well", I answered with glowing confidence, "each message is retried a few times, then discarded, and the next one in the queue is attempted."

"Wouldn't that mean the queue would fill up?", he innocently replied.

"No, it's an unlimited queue, it will keep all the messages and... Shit."

The queue might not be limited, but the laws of physics still apply, and the machine it runs on has a very real physical limit on RAM.

This is starting to look like a long day of work.

Monkey see, monkey patch

ThreadPoolExecutor has no built-in way to limit the size of its queue. It uses SimpleQueue, which is, well, simple.

Now, I can technically override the private attribute that holds it and use a Queue instead: set maxsize to a reasonable limit and, before adding a new message, check whether the queue is full and, if so, pop the oldest message to make room for the new one.

That's possible. We would lose some messages, but they are still in the log file in case this very rare situation happens, and we don't cause the violent death of our node.
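The same drop-oldest policy can be sketched without touching the executor's private attribute. This swaps in a different technique: a dedicated daemon thread fed by a `collections.deque(maxlen=...)`, which evicts its oldest entry on `append()` when full. `DropOldestShipper` is a hypothetical name and `send` any blocking POST function.

```python
import collections
import threading


class DropOldestShipper:
    """Background sender keeping at most `maxlen` unsent messages in memory."""

    def __init__(self, send, maxlen=1000):
        self._send = send
        # A deque with maxlen evicts from the left (the oldest entry) on append().
        self._pending = collections.deque(maxlen=maxlen)
        self._cond = threading.Condition()
        self._closed = False
        self.dropped = 0
        self._thread = threading.Thread(target=self._run, daemon=True)
        self._thread.start()

    def submit(self, message):
        with self._cond:
            if len(self._pending) == self._pending.maxlen:
                self.dropped += 1  # the append below evicts the oldest message
            self._pending.append(message)
            self._cond.notify()

    def _run(self):
        while True:
            with self._cond:
                while not self._pending and not self._closed:
                    self._cond.wait()
                if not self._pending:  # closed and fully drained
                    return
                message = self._pending.popleft()
            try:
                self._send(message)  # network call happens outside the lock
            except Exception:
                pass  # one failed shipment must not kill the worker

    def close(self, drain=True, timeout=None):
        with self._cond:
            if not drain:
                self._pending.clear()
            self._closed = True
            self._cond.notify()
        self._thread.join(timeout)
```

Memory is bounded by `maxlen` pending messages plus the one in flight, and the `dropped` counter makes the losses visible instead of silent.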

Sure you gotta test that, write biblical comment sections and justify it during a code review, but that's the job.

Let's get it done, I'm getting tired.

Operation cannot possibly fail, a second time

You know what happens when you exit the Python interpreter with a ThreadPoolExecutor queue full of messages?

It waits. It waits until all the messages are consumed. Now if you've got hundreds of unsent HTTP messages with your genius idea of retry + backoff + jitter, you've got a long wait ahead of you.

You have to drain them.

You can call shutdown and cancel all pending futures, so that you only wait for the messages already being processed. Thanks to inversion of control, we don't have access to the program entry points, so we can't do that with a context manager.
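A small sketch of what cancelling does, assuming Python 3.9+ (where `shutdown()` gained `cancel_futures`). `slow_send` is a hypothetical stand-in for a POST stuck in retries: without cancellation the 100 jobs below would take about 5 seconds; with it, only the job already running is waited for.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def slow_send(message):
    time.sleep(0.05)  # stand-in for a POST stuck in retries and backoff
    return message


executor = ThreadPoolExecutor(max_workers=1)
futures = [executor.submit(slow_send, i) for i in range(100)]

# Cancel every future still waiting in the queue, then wait only for the
# job(s) a worker thread has already started.
executor.shutdown(wait=True, cancel_futures=True)

cancelled = sum(f.cancelled() for f in futures)
print(f"{cancelled} of {len(futures)} messages were discarded instead of sent")
```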

This is what atexit is good for.
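A sketch of an `atexit`-driven drain, using a hand-rolled daemon worker rather than a stock executor. That choice is deliberate and worth checking on your interpreter: since Python 3.9, ThreadPoolExecutor's own exit hook is registered through `threading`'s internal shutdown machinery, which CPython runs before `atexit` callbacks, so for a stock executor an `atexit` handler may only fire after the queue has already been worked off. `ExitSafeShipper` and `send` are hypothetical.

```python
import atexit
import queue
import threading


class ExitSafeShipper:
    """Daemon worker that, at interpreter exit, discards unsent messages."""

    def __init__(self, send, maxsize=1000):
        self._send = send
        self._queue = queue.Queue(maxsize=maxsize)
        # daemon=True: the interpreter does not join this thread at exit,
        # so the atexit callback below decides how long we wait.
        self._thread = threading.Thread(target=self._run, daemon=True)
        self._thread.start()
        atexit.register(self.discard_and_stop)

    def submit(self, message):
        try:
            self._queue.put_nowait(message)
        except queue.Full:
            pass  # dropped here, but still present in the local log file

    def _run(self):
        while True:
            message = self._queue.get()
            if message is None:  # sentinel from discard_and_stop()
                return
            try:
                self._send(message)
            except Exception:
                pass  # a failed shipment must not kill the worker

    def discard_and_stop(self, timeout=5.0):
        """Drop everything not yet started, then wait (bounded) for the in-flight send."""
        atexit.unregister(self.discard_and_stop)  # safe to call manually too
        while True:
            try:
                self._queue.get_nowait()
            except queue.Empty:
                break
        # The queue was just emptied, so this only blocks if producers are still running.
        self._queue.put(None)
        self._thread.join(timeout)
        return not self._thread.is_alive()
```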

Did I mention this HTTP transport was a stupid idea?

I'll do my own error handling, with blackjack and excepthook

Until now, nothing had gone wrong.

Because you see, all this is what happens when our program doesn't go wrong.

However, logging is at its most useful when a program does.

Having a trace of the stack trace when the race is over is nicer than tracing. Repeat this sentence 5 times very fast.

I already wrote about excepthook, so you know the drill: we get the old one, we attach the new one, we call the old one.
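The drill, as a sketch: save the previous `sys.excepthook`, install a new one that ships the traceback synchronously, then chain to the previous hook. `install_excepthook` and `send_now` (a blocking POST) are hypothetical names; the payload shape is an assumption.

```python
import sys
import traceback


def install_excepthook(send_now):
    """Chain a hook that ships uncaught exceptions before the default handling."""
    previous = sys.excepthook  # 1. get the old one

    def hook(exc_type, exc_value, exc_tb):
        try:
            send_now({
                "event": "uncaught exception",
                "exception": "".join(
                    traceback.format_exception(exc_type, exc_value, exc_tb)
                ),
            })
        except Exception:
            pass  # never let log shipping mask the original crash
        previous(exc_type, exc_value, exc_tb)  # 3. call the old one

    sys.excepthook = hook  # 2. attach the new one
    return previous
```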

This does put us in a conundrum. Put the crash report in the queue that is about to be drained at exit, where it will be thrown away with everything else? But the queue-pushing callback is already attached to the logger.

At this stage, I had converted to 7 different religions in the last 2 hours, including Pastafarianism, so I just swapped the ThreadPoolExecutor.submit method at run time for a synchronous one that does the HTTP call directly.
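A sketch of that run-time swap, under the assumption that callers only need a Future back: an instance attribute shadows the class's `submit`, so only this executor is affected, and the work runs in the calling thread before the hook returns. `make_submit_synchronous` is a hypothetical name; creating a bare `Future()` is normally reserved for tests, which is fitting for a hack like this.

```python
import threading
from concurrent.futures import Future, ThreadPoolExecutor


def make_submit_synchronous(executor):
    """Shadow executor.submit with a version that runs fn in the calling thread."""

    def submit_now(fn, /, *args, **kwargs):
        future = Future()  # pre-resolved Future keeps callers' code unchanged
        try:
            future.set_result(fn(*args, **kwargs))
        except Exception as exc:
            future.set_exception(exc)
        return future

    # An instance attribute takes precedence over the class's submit method.
    executor.submit = submit_now
```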

Ramen.