Testing with Python (part 9): the extra mile

We almost look professional now

Summary

You can get test coverage of your code base by using pytest-cov:

```bash
pytest --cov=my_awesome_package --cov-report=html
```

This will show you what lines are not executed by your tests:

And you can test against a huge matrix of contexts, with different versions of Python and libs, using tools like nox:

```python
import nox


@nox.session(python=["3.8", "3.9", "3.10"])
def tests(session):
    session.install("pytest")
    session.run("pytest")
```

So that you don't just test your code, but also how it integrates with the entire ecosystem.

Better, faster, stronger

You can always test more. Deeper, with additional contexts. There is no limit to testing except the laws of physics and how much one can pay for it.

After reading this series, especially the last article, you should have a good idea of all the variables you want to take into consideration to move the cursor of investment in testing for each project.

This cursor, evidently, is not fixed. It changes from codebase to codebase. It also changes during the life of a codebase.

And today we are going to cover what you can do to move it further to the right of the gauge.

Coverage

Coverage is a metric that tells you what lines of your code were executed by your tests. It's not good at telling you how well-tested your code is, but it's useful to highlight where you are lacking tests.
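To make "lines executed" concrete, here is a toy line tracer built on Python's sys.settrace hook. It is only a sketch of the idea, not how coverage.py is implemented (the real tool is much faster and handles files, branches and reporting); add() is a stand-in matching the function we use later in this article, and executed_lines() is a made-up helper:

```python
import sys


def add(a, b):
    if a == 666:
        raise ValueError("Unexpected evil in computation. Please reboot.")
    return a + b


def executed_lines(func, *args):
    """Toy line coverage: return the line offsets (0 = the def line) of
    `func` that ran while calling func(*args)."""
    code = func.__code__
    seen = set()

    def tracer(frame, event, arg):
        # Ignore every frame except the ones running `func` itself
        if frame.f_code is not code:
            return None
        if event == "line":
            seen.add(frame.f_lineno - code.co_firstlineno)
        return tracer  # keep receiving events for this frame

    sys.settrace(tracer)
    try:
        func(*args)
    except Exception:
        pass  # we only care about which lines ran, not about the outcome
    finally:
        sys.settrace(None)
    return seen


print(2 in executed_lines(add, 1, 2))    # False: the raise line never ran
print(2 in executed_lines(add, 666, 1))  # True
```

A line that never shows up in the set is exactly the kind of hole a coverage report highlights.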

In Python, pytest comes with a great plugin ecosystem, and of course, it includes everything you need to do coverage: pytest-cov.

Let's go back to the example we used in part 3 that looked like this:

```text
less_basic_project
├── my_awesome_package
│   ├── calculation_engine.py
│   ├── __init__.py
│   └── permissions.py
├── pyproject.toml
└── tests
    ├── conftest.py
    ├── test_calculation_engine.py
    └── test_permissions.py
```

How much of our my_awesome_package code is actually called by our tests?

First, we pip install pytest-cov; then we can run the tests with coverage:

```bash
pytest --cov=my_awesome_package --cov-report=term
```

(Make sure you don't have --no-summary configured in pyproject.toml, or the report will be stripped.)

This gives us:

```text
tests/test_calculation_engine.py
This is run before each test
.
We tested with 2
This is run after each test
This is run before each test
.
This is run after each test
..
tests/test_permissions.py .

---------- coverage: platform linux, python 3.10.14-final-0 ----------
Name                                       Stmts   Miss  Cover
--------------------------------------------------------------
my_awesome_package/__init__.py                 0      0   100%
my_awesome_package/calculation_engine.py       2      0   100%
my_awesome_package/permissions.py              2      0   100%
--------------------------------------------------------------
TOTAL                                          4      0   100%
```

We can see we cover all our lines of code (100%).

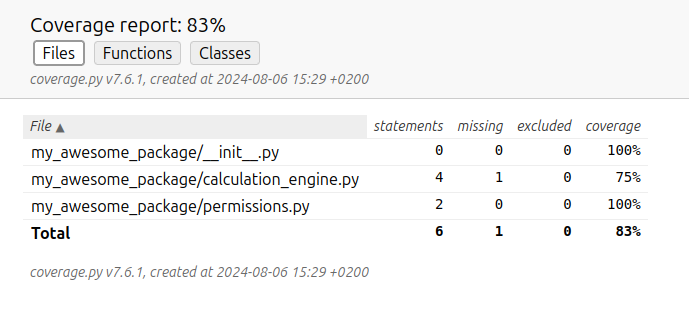

Alternatively, we can produce a web report by calling:

```bash
pytest --cov=my_awesome_package --cov-report=html
```

This generates an HTML static site in the directory htmlcov. If we open the index.html file with a web browser, we can see the same data, but each line is clickable to dig deeper into the code base stats:

And indeed, if we modify the function in calculation_engine.py from:

```python
def add(a, b):
    return a + b
```

To:

```python
def add(a, b):
    if a == 666:
        raise ValueError('Unexpected evil in computation. Please reboot.')
    return a + b
```

and run the tests again, the code coverage falls to 83% (because we have very little code in total, our two-line change looks very big):

If I click on calculation_engine.py, I can see the impacted lines:

The line in red is never executed, and I could add a new test to make sure this specific if branch is exercised once.
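For instance, a test along these lines would exercise that branch. It's a sketch: add() is copied inline so the snippet runs on its own (in the project you would import it from my_awesome_package.calculation_engine inside tests/test_calculation_engine.py), and the test name is made up:

```python
import pytest


# Inline copy of the modified add(), only so this snippet is self-contained
def add(a, b):
    if a == 666:
        raise ValueError('Unexpected evil in computation. Please reboot.')
    return a + b


def test_add_rejects_evil():
    # Takes the `if` branch, so the red line gets executed at least once
    with pytest.raises(ValueError, match="Unexpected evil"):
        add(666, 1)
```

pytest.raises fails the test if no ValueError is raised, and match checks the message with a regex search.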

A word of warning: coverage is easily abused.

First, it's easy to write terrible tests that still get high coverage, since coverage only checks that lines were executed, not that the code behaves as it should. So you should not rely on coverage to demonstrate quality, only to find holes.

Second, it's tempting to require very high coverage right off the bat. But unless the project you are working on warrants it, it will slow down development a lot.

That's because the first percentage points of coverage are low-hanging fruit, but the last ones require a lot of work compared to what they bring to the table. Since at the start of a project you refactor regularly and delete tests, a test that is expensive to write is a costly investment.

With this in mind, you can enforce a minimum required coverage with --cov-fail-under:

```text
pytest --cov=my_awesome_package --cov-report=html --cov-fail-under=85
... # usual report
FAIL Required test coverage of 85% not reached. Total coverage: 83.33%
```

This prints an error and sets the shell return code to 1, which is very useful in a CI context.
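The percentage itself is simple arithmetic. Here is a simplified sketch of the check, assuming plain line coverage (no branch coverage) and assuming the modified calculation_engine.py counts 4 statements, one of which (the raise) never runs, for 6 statements in total. The helper names are hypothetical and are not pytest-cov's API:

```python
def coverage_percent(statements: int, missed: int) -> float:
    """Line coverage as a percentage (simplified: ignores branch coverage)."""
    if statements == 0:
        return 100.0  # an empty file counts as fully covered, like __init__.py
    return 100.0 * (statements - missed) / statements


def fails_under(total: float, threshold: float) -> bool:
    """Hypothetical helper mimicking the idea behind --cov-fail-under."""
    return total < threshold


# 6 statements across the package, 1 of them never executed
total = coverage_percent(statements=6, missed=1)
print(f"Total coverage: {total:.2f}%")  # Total coverage: 83.33%
print(fails_under(total, 85))           # True
```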

If I configure coverage and my project is not super sensitive (most aren’t, and despite what clients tell you, they don’t want to pay for the pony they think they need), I set the minimum coverage between 75% and 95% depending on the team. Then I regularly raise it by 1% as the project matures.

There are very, very few projects where I set it to 100%, although it happens. 95% is usually the sweet spot.
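If you want to automate that regular 1% bump, a hypothetical ratchet could look like the sketch below. The function name, the rule of never exceeding the coverage the suite currently reaches, and the 95% ceiling default are my assumptions, not a feature of pytest-cov:

```python
import math


def bump_threshold(current: float, measured: float,
                   step: float = 1.0, ceiling: float = 95.0) -> float:
    """Hypothetical ratchet for the --cov-fail-under value.

    Raises the threshold by `step` points, but never above the coverage the
    suite currently reaches (rounded down) nor above `ceiling`, and never
    lowers it.
    """
    candidate = min(current + step, math.floor(measured), ceiling)
    return float(max(current, candidate))


print(bump_threshold(80, 83.33))  # 81.0
print(bump_threshold(83, 83.33))  # 83.0
```

Rounding the measured value down keeps the new threshold reachable, so bumping it never breaks a green build on its own.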

Testing several versions of something

Some projects need to be compatible with several different Python VMs. It can be different versions, like 3.8, 3.9, 3.10, and so on. Or even different implementations, like CPython, PyPy, and MicroPython.

Since doing that manually would be a chore, the community came up with many ways to automate it, such as tox or Hatch.

I personally have a preference for nox, because it uses Python instead of a DSL in a config file.

To use nox, make sure that:

- You have one "main" venv that you use for your project, in which you install all your tooling. Make it use the most common and recent Python version you support.
- You activate the venv and pip install nox in it. Only use nox from that venv. Never outside of it, nor from another venv. Don't use pipx or install it globally like the docs say. Remember our mantra.
- You have each version of Python you wish to test your project with already installed on this machine. Unlike Hatch, nox doesn't install any Python for you; it expects them to be there already.
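Before running nox, you can check that those interpreters are really there. This is a small sketch assuming a Unix-like system where each version is available on PATH as pythonX.Y (e.g. python3.8); on Windows interpreters are usually named differently, so adapt it there. The helper name is made up:

```python
import shutil


def find_interpreters(versions):
    """Map each version string to the pythonX.Y executable found on PATH, or None.

    Assumes Unix-style interpreter names such as python3.8.
    """
    return {version: shutil.which(f"python{version}") for version in versions}


if __name__ == "__main__":
    for version, path in find_interpreters(["3.8", "3.9", "3.10"]).items():
        print(f"{version}: {path or 'MISSING'}")
```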

Now, for our project, I'm going to revert the add() function to how it was:

```python
def add(a, b):
    # if a == 666:
    #     raise ValueError("Unexpected evil in computation. Please reboot.")
    return a + b
```

Then I create a noxfile.py file at the root of the project (so next to the source code and the tests, not in them), containing:

```python
import nox


@nox.session(python=["3.8", "3.9", "3.10"])  # I have those installed
def tests(session):
    session.install("pytest", "pytest-cov")
    # you can install all your deps here with:
    # session.install("-r", "requirements.txt")

    # You can run any command you want here, it's not limited
    # to running pytest
    session.run("pytest", "--cov=my_awesome_package", "--cov-fail-under=85")
```

Be careful not to introduce any extraneous spaces in the arguments; nox really doesn't like that. E.g., "pytest " would fail.

Then we just run nox:

```text
❯ nox
nox > Running session tests-3.8
nox > Creating virtual environment (virtualenv) using python3.8 in .nox/tests-3-8
nox > python -m pip install pytest pytest-cov
nox > pytest --cov=my_awesome_package --cov-fail-under=85
=============================================================== test session starts ================================================================
collected 5 items

tests/test_calculation_engine.py
This is run before each test
.
We tested with 1
This is run after each test
This is run before each test
.
This is run after each test
..
tests/test_permissions.py .

---------- coverage: platform linux, python 3.8.19-final-0 -----------
Name                                       Stmts   Miss  Cover
--------------------------------------------------------------
my_awesome_package/__init__.py                 0      0   100%
my_awesome_package/calculation_engine.py       2      0   100%
my_awesome_package/permissions.py              2      0   100%
--------------------------------------------------------------
TOTAL                                          4      0   100%

Required test coverage of 85% reached. Total coverage: 100.00%

================================================================ 5 passed in 0.02s =================================================================
nox > Session tests-3.8 was successful.
nox > Running session tests-3.9
nox > Creating virtual environment (virtualenv) using python3.9 in .nox/tests-3-9
nox > python -m pip install pytest pytest-cov
nox > pytest --cov=my_awesome_package --cov-fail-under=85
=============================================================== test session starts ================================================================
collected 5 items

tests/test_calculation_engine.py
This is run before each test
.
We tested with 2
This is run after each test
This is run before each test
.
This is run after each test
..
tests/test_permissions.py .

---------- coverage: platform linux, python 3.9.19-final-0 -----------
Name                                       Stmts   Miss  Cover
--------------------------------------------------------------
my_awesome_package/__init__.py                 0      0   100%
my_awesome_package/calculation_engine.py       2      0   100%
my_awesome_package/permissions.py              2      0   100%
--------------------------------------------------------------
TOTAL                                          4      0   100%

Required test coverage of 85% reached. Total coverage: 100.00%

================================================================ 5 passed in 0.02s =================================================================
nox > Session tests-3.9 was successful.
nox > Running session tests-3.10
nox > Creating virtual environment (virtualenv) using python3.10 in .nox/tests-3-10
nox > python -m pip install pytest pytest-cov
nox > pytest --cov=my_awesome_package --cov-fail-under=85
=============================================================== test session starts ================================================================
collected 5 items

tests/test_calculation_engine.py
This is run before each test
.
We tested with 10
This is run after each test
This is run before each test
.
This is run after each test
..
tests/test_permissions.py .

---------- coverage: platform linux, python 3.10.14-final-0 ----------
Name                                       Stmts   Miss  Cover
--------------------------------------------------------------
my_awesome_package/__init__.py                 0      0   100%
my_awesome_package/calculation_engine.py       2      0   100%
my_awesome_package/permissions.py              2      0   100%
--------------------------------------------------------------
TOTAL                                          4      0   100%

Required test coverage of 85% reached. Total coverage: 100.00%

================================================================ 5 passed in 0.02s =================================================================
nox > Session tests-3.10 was successful.
nox > Ran multiple sessions:
nox > * tests-3.8: success
nox > * tests-3.9: success
nox > * tests-3.10: success
```

So, in essence, nox is a kind of task runner (it can, in fact, let you define command-line parameters to pass), but one that executes the same task several times with different versions of Python.

It's not limited to versions of Python: you can also test different versions of your libs. Let's say we depend on requests, but we want to test several versions of it, and we support several databases:

```python
import nox


@nox.session(python=["3.8", "3.9", "3.10"])
@nox.parametrize("requests_version", ["2.29", "2.30", "2.31"])
@nox.parametrize("database", ["postgres", "mysql", "sqlite3"])
def tests(session, requests_version, database):  # values passed as params
    session.install("pytest", "pytest-cov")
    session.install(f"requests=={requests_version}")
    if database == "postgres":
        session.install("psycopg2-binary")
    if database == "mysql":
        session.install("mysql-connector-python")
    session.run("pytest", "--cov=my_awesome_package", "--cov-fail-under=85")
```

This generates a myriad of combinations, which we can list:

```text
nox --list
* tests-3.8(database='postgres', requests_version='2.29')
* tests-3.8(database='mysql', requests_version='2.29')
* tests-3.8(database='sqlite3', requests_version='2.29')
* tests-3.8(database='postgres', requests_version='2.30')
* tests-3.8(database='mysql', requests_version='2.30')
* tests-3.8(database='sqlite3', requests_version='2.30')
* tests-3.8(database='postgres', requests_version='2.31')
* tests-3.8(database='mysql', requests_version='2.31')
* tests-3.8(database='sqlite3', requests_version='2.31')
* tests-3.9(database='postgres', requests_version='2.29')
* tests-3.9(database='mysql', requests_version='2.29')
* tests-3.9(database='sqlite3', requests_version='2.29')
* tests-3.9(database='postgres', requests_version='2.30')
* tests-3.9(database='mysql', requests_version='2.30')
* tests-3.9(database='sqlite3', requests_version='2.30')
* tests-3.9(database='postgres', requests_version='2.31')
* tests-3.9(database='mysql', requests_version='2.31')
* tests-3.9(database='sqlite3', requests_version='2.31')
* tests-3.10(database='postgres', requests_version='2.29')
* tests-3.10(database='mysql', requests_version='2.29')
* tests-3.10(database='sqlite3', requests_version='2.29')
* tests-3.10(database='postgres', requests_version='2.30')
* tests-3.10(database='mysql', requests_version='2.30')
* tests-3.10(database='sqlite3', requests_version='2.30')
* tests-3.10(database='postgres', requests_version='2.31')
* tests-3.10(database='mysql', requests_version='2.31')
* tests-3.10(database='sqlite3', requests_version='2.31')
```

Nox sessions can become slow quickly, but you can speed them up a little with a bit of config.

In our case, HTTP requests and the database likely have nothing in common, so we can test the variants separately, avoiding the Cartesian product:

```python
import nox


@nox.session(python=["3.8", "3.9", "3.10"])
@nox.parametrize("database", ["postgres", "mysql", "sqlite3"])
def test_db(session, database):
    session.install("pytest", "pytest-cov")
    session.install("requests")
    if database == "postgres":
        session.install("psycopg2-binary")
    if database == "mysql":
        session.install("mysql-connector-python")
    session.run("pytest", "--cov=my_awesome_package", "--cov-fail-under=85")


@nox.session(python=["3.8", "3.9", "3.10"])
@nox.parametrize("requests_version", ["2.29", "2.30", "2.31"])
def test_http(session, requests_version):
    session.install("pytest", "pytest-cov")
    session.install(f"requests=={requests_version}")
    session.run("pytest", "--cov=my_awesome_package", "--cov-fail-under=85")
```

This cuts down the number of combinations:

```text
nox --list
test_db-3.8(database='postgres')
test_db-3.8(database='mysql')
test_db-3.8(database='sqlite3')
test_db-3.9(database='postgres')
test_db-3.9(database='mysql')
test_db-3.9(database='sqlite3')
test_db-3.10(database='postgres')
test_db-3.10(database='mysql')
test_db-3.10(database='sqlite3')
test_http-3.8(requests_version='2.29')
test_http-3.8(requests_version='2.30')
test_http-3.8(requests_version='2.31')
test_http-3.9(requests_version='2.29')
test_http-3.9(requests_version='2.30')
test_http-3.9(requests_version='2.31')
test_http-3.10(requests_version='2.29')
test_http-3.10(requests_version='2.30')
test_http-3.10(requests_version='2.31')
```

And we can now also choose to run only a subset of them with nox --session session_name, session_name being test_http or test_db. You can be even more precise and run nox --session test_db-3.8 or nox --session "test_db-3.8(database='postgres')" if you have some debugging to do with a specific session.
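The saving from splitting is easy to count. Here is a quick check with itertools.product, using the same version and database lists as the noxfiles above, comparing one session that stacks both parametrizations with two separate sessions:

```python
from itertools import product

pythons = ["3.8", "3.9", "3.10"]
requests_versions = ["2.29", "2.30", "2.31"]
databases = ["postgres", "mysql", "sqlite3"]

# One session stacking both parametrizations: every combination of every axis
combined = list(product(pythons, requests_versions, databases))

# Two separate sessions: each axis is only crossed with the Python versions
split = list(product(pythons, databases)) + list(product(pythons, requests_versions))

print(len(combined))  # 27
print(len(split))     # 18
```

The gap widens with every new independent axis, since stacking multiplies the counts while splitting only adds them.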

You can also use an alternative backend to pip and venv. This means you can use conda if that's your jam, but since we are talking speed, you'll get a huge performance boost by pip installing uv and then using it:

```python
import nox

# pip install uv in your main venv, then set venv_backend here
@nox.session(python=["3.8", "3.9", "3.10"], venv_backend="uv")
@nox.parametrize("database", ["postgres", "mysql", "sqlite3"])
def test_db(session, database):
    session.install("pytest", "pytest-cov")
    session.install("requests")
    if database == "postgres":
        session.install("psycopg2-binary")
    if database == "mysql":
        session.install("mysql-connector-python")
    session.run("pytest", "--cov=my_awesome_package", "--cov-fail-under=85")


@nox.session(python=["3.8", "3.9", "3.10"], venv_backend="uv")
@nox.parametrize("requests_version", ["2.29", "2.30", "2.31"])
def test_http(session, requests_version):
    session.install("pytest", "pytest-cov")
    session.install(f"requests=={requests_version}")
    session.run("pytest", "--cov=my_awesome_package", "--cov-fail-under=85")
```

This nox session goes from 53 seconds to 8 seconds when moving to uv.

